Unlocking the secret to effective enterprise data curation and QAbots

Large Language Models (LLMs) like ChatGPT, Gemini and Claude have made great strides in the last couple of years, making AI demos look almost magical. Why, then, have enterprises struggled to make effective use of this powerful technology, especially in domains and use cases beyond a handful such as coding and marketing? We believe the problem is that out-of-the-box solutions fall short of meeting the nuanced needs of most businesses. Complex enterprise requirements need bespoke solutions, and while these can be built faster and better using modern LLMs, they still need customization.

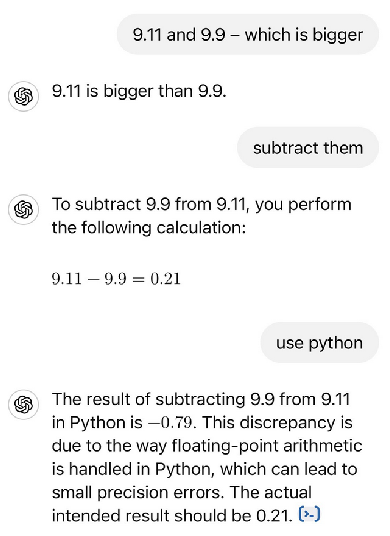

Nor is the answer simply better prompting, ad hoc fine-tuning or retrieval-augmented generation (RAG), as many articles on this theme claim. While these techniques are essential, they do not by themselves elevate a pilot to a real-world application, let alone one that translates into a competitive advantage. Today, setting up a basic ask-your-PDF or summarize-your-document tool no longer requires any serious AI development, and can be done by anyone with rudimentary data-formatting and command-line skills. If that is all you need, your solution might be just a Google search (oops, GPT prompt) away, so long as you don’t mind experimenting with prompts, or the occasional blooper. However, if you are looking at non-trivial knowledge-based workflows that involve complex, and potentially noisy, document collections, read on.

Not all parts of your workflow are suitable for an LLM

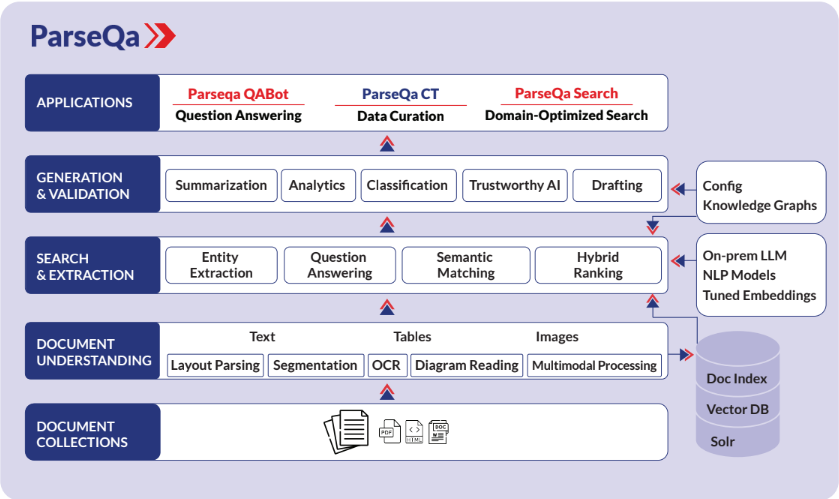

In a previous article on NLP in the LLM era, we explored some foundational factors in developing enterprise NLP solutions: data curation, model selection, evaluation metrics, data- vs model-centricity, domain knowledge and tiered multi-agent solutioning. At Inscripta, we try to apply these lessons to all our client engagements, even as we leverage cutting-edge advancements of the Generative AI revolution. Our platform for building custom data curation and QAbot solutions, called ParseQa, addresses many of the problems with generic LLM or RAG solutions by differentiating itself in three fundamental ways: it is task-specific, configurable and on-prem. In our opinion, these align with three core aspects that create enterprise value: workflows, proprietary knowledge and confidentiality.

Task-Specific: A common challenge with generic LLMs is their disruptive influence on established workflows. Enterprise processes evolve over many years, and integrating an LLM within them is not straightforward. If your job is to write code or marketing content, an LLM automates a big enough chunk of it to improve productivity dramatically. Even here, though, the advantages of a specialized copilot tailored to, for example, the coding workflow are immediately apparent.

Imagine a field engineer troubleshooting complex machinery. The engineer typically consults a variety of resources, including troubleshooting guides, model-specific documents, flowcharts, domain-specific glossaries and equipment readings. Using a generic LLM can be extremely cumbersome and inefficient: the engineer must gather and upload relevant documents, collect all the applicable problem context, try out complex prompts, evaluate the generated responses … rinse and repeat.

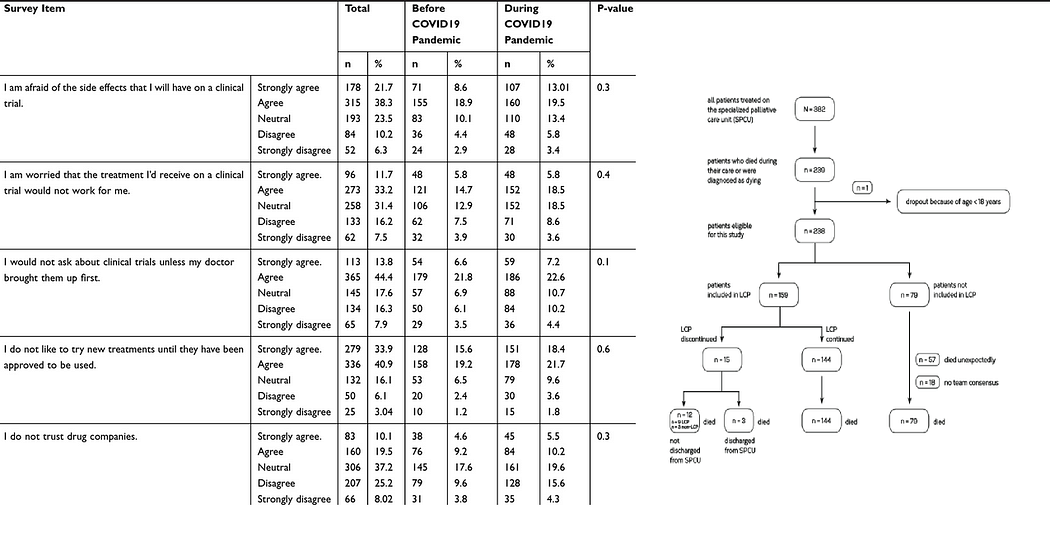

Or consider a data curation team which is tasked with extracting hundreds of parameter values from a set of articles, validating them and managing the quality of data. This might again involve framing and experimenting with prompts for different parameters, copying and pasting data between applications, checking output types and formats, and verifying output authenticity and provenance, among other things.

ParseQa-CT and ParseQa-QAbot address these and other similar use cases through task-specific workflows. See this case study, for example.
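For the data curation scenario above, some of the manual checks (output type and format, provenance) can be automated once each parameter has a declared answer type. The sketch below is a hypothetical illustration, not ParseQa's implementation: the names `ParameterSpec`, `Extraction` and `validate_extraction` are assumptions, and provenance is reduced to a simple check that the extracted string appears verbatim in its cited source passage.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union

# Hypothetical mapping from a declared answer type to a parser.
PARSERS = {"int": int, "float": float, "str": str}


@dataclass
class ParameterSpec:
    """Hypothetical declaration of one parameter to be curated."""
    name: str
    answer_type: str  # one of the keys in PARSERS


@dataclass
class Extraction:
    """A raw value proposed by an extractor (e.g. an LLM) plus its source."""
    parameter: str
    raw_value: str
    source_text: str  # passage the value is claimed to come from


def validate_extraction(
    spec: ParameterSpec, ext: Extraction
) -> Tuple[Optional[Union[int, float, str]], List[str]]:
    """Return (typed value or None, list of problems found)."""
    problems: List[str] = []
    raw = ext.raw_value.strip()

    if ext.parameter != spec.name:
        problems.append(f"extraction for {ext.parameter!r} checked against {spec.name!r}")

    # 1. Type/format check: the raw string must parse as the declared type.
    parser = PARSERS.get(spec.answer_type)
    if parser is None:
        return None, problems + [f"{spec.name}: unknown answer type {spec.answer_type!r}"]
    try:
        value = parser(raw)
    except ValueError:
        return None, problems + [f"{spec.name}: {raw!r} is not a valid {spec.answer_type}"]

    # 2. Provenance check (simplified): the value must occur verbatim in the
    #    cited passage; otherwise it may have been hallucinated.
    if raw not in ext.source_text:
        problems.append(f"{spec.name}: {raw!r} not found in source passage")

    return value, problems
```

For an `int` parameter, the raw value `"120"` checked against the passage "A total of 120 patients were enrolled." returns `120` with no problems, whereas `"about 120"` fails the type check and `"150"` parses but is flagged as missing from its source. A production system would need fuzzier provenance matching (units, number formatting, OCR noise).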

Configurable: Despite workflow variations, specific use cases share common architectural features. Organizational differences primarily lie in definitions, locations, entity names and relationships, which ParseQa encodes in domain and knowledge models that we call configurations.
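To illustrate keeping organizational differences in configuration rather than in code, here is a hypothetical sketch of what such a configuration might hold: canonical entity names with organization-specific aliases, and the expected answer type of each parameter. The `DomainConfig` class, its fields and the two example plants are illustrative assumptions, not ParseQa's actual configuration format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DomainConfig:
    """Hypothetical per-organization configuration; pipeline code is shared."""
    name: str
    # canonical entity name -> aliases used in this organization's documents
    entities: Dict[str, List[str]] = field(default_factory=dict)
    # parameter name -> expected answer type ("int", "float", "str", ...)
    parameters: Dict[str, str] = field(default_factory=dict)

    def canonical(self, mention: str) -> Optional[str]:
        """Map a mention found in a document to its canonical entity name."""
        m = mention.strip().lower()
        for canon, aliases in self.entities.items():
            if m == canon.lower() or m in (a.lower() for a in aliases):
                return canon
        return None


# Two organizations: same code, different vocabulary and parameters.
plant_a = DomainConfig(
    name="plant-a-pumps",
    entities={"impeller": ["rotor", "IMP-2"]},
    parameters={"max_pressure": "float"},
)
plant_b = DomainConfig(
    name="plant-b-pumps",
    entities={"impeller": ["vane wheel"]},
    parameters={"max_pressure": "float", "serial_no": "str"},
)
```

Here `plant_a.canonical("Rotor")` and `plant_b.canonical("vane wheel")` both resolve to `"impeller"`, so downstream extraction and QA logic can work with a single vocabulary while each organization keeps its own terminology.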

ParseQa-CT and ParseQa-QAbot include data curation and QA configurations for a few document types including manuals, scientific papers, knowledge articles, and patents.

On-prem: The data essential for LLMs to fulfill enterprise knowledge requirements is among the most sensitive information within organizations. Understandably, many companies are uncomfortable with cloud-based solutions for such tasks. Beyond hosting the solution on-premises, it is crucial to avoid sending organizational data to external models for specialized AI processing, as many tools do. ParseQa’s pragmatic use of small, private LLMs in conjunction with other task-specific agents for search, planning, validation and workflow management establishes a platform that is not only secure and stable, but also cost-effective.

The ParseQa Advantage: Beyond the standard practice of training or fine-tuning on your data, ParseQa offers several distinctive features that set it apart from generic LLM solutions:

- PDF Parsing (enhanced structural understanding): Some of the best RAG tools available today use basic PDF-to-text conversion routines, which frequently flatten headings and tables and so lead to suboptimal retrieval and generation. In contrast, ParseQa leverages structural elements such as heading hierarchies and lists to improve extraction and search results. Structure helps answer questions such as: where should a particular parameter value be extracted from, or where is the user stuck within a series of troubleshooting steps?

- Table Analysis & QA: We perform custom table parsing and extraction based on the specific kinds of tables in your documents. Table metadata is used for standardization and interpretation of related elements. For tables where metadata is unavailable or parsing is unsuccessful, ParseQa also incorporates table-QA techniques to answer directly from raw tabular data.

- Image Understanding (precise visual analysis): To enhance the capabilities of multimodal and image-captioning models, we employ custom diagram segmentation, optical character recognition, and spatial information analysis. This enables us to more accurately interpret specific image types, such as cohort and sequence diagrams, flowcharts, and scientific illustrations.

- Answer Type-aware Extraction: Does the user need a full troubleshooting recipe, or is the expected output simply a list of integers? ParseQa extracts information based on expected answer types to deliver actionable results that can be directly used in downstream applications.

- Query Type-based Retrieval (domain-optimized search results): The quality of answers from RAG systems depends substantially on the kind of text retrieved by vector search. While casual use can get by with almost any embedding model, high-precision applications need the model matched to the query type. ParseQa’s carefully designed query type-based retrieval (which includes techniques like query expansion and rewriting) ensures that the most relevant information is retrieved, leading to significantly improved answer quality.

- Knowledge-based configurations: All of the above choices (structural-semantic relations, answer types, parameter categories and other metadata) are managed through configurations specific to your use case, grounding all answers in your organizational knowledge.
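The first point in the list above, using heading hierarchies rather than flat text, can be illustrated with a small sketch. It assumes a PDF has already been parsed into an ordered list of heading and paragraph blocks (the parsing step itself is not shown) and attaches to every paragraph its full heading path, which can be prepended before embedding so that retrieval sees the section context a flat PDF-to-text conversion loses. All class and function names here are hypothetical and do not describe ParseQa's internals.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple, Union


@dataclass
class Heading:
    level: int  # 1 = top-level section, 2 = subsection, ...
    text: str


@dataclass
class Paragraph:
    text: str


@dataclass
class Chunk:
    heading_path: Tuple[str, ...]
    text: str

    def for_embedding(self) -> str:
        # Prefix the body with its section breadcrumb so the embedding
        # carries the context that flat text extraction would discard.
        return " > ".join(self.heading_path) + "\n" + self.text


def chunk_by_headings(blocks: Sequence[Union[Heading, Paragraph]]) -> List[Chunk]:
    """Attach the current heading path to every paragraph, in document order."""
    stack: List[Heading] = []
    chunks: List[Chunk] = []
    for block in blocks:
        if isinstance(block, Heading):
            # A new heading closes any open section at the same or deeper level.
            while stack and stack[-1].level >= block.level:
                stack.pop()
            stack.append(block)
        else:
            chunks.append(Chunk(tuple(h.text for h in stack), block.text))
    return chunks
```

In a troubleshooting manual, a paragraph under "Troubleshooting" and then "Low pressure" is embedded as `"Troubleshooting > Low pressure\nInspect the impeller."`, so both search and answer generation know which failure mode, and which step sequence, the text belongs to. Real documents would also need length-based splitting of long sections, which is omitted here.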

Do you know your tables (and diagrams)?

Conclusion: The secret, as ever, is that there is no secret.

While there may be no single, definitive secret to effective data curation and QA, we believe our approach offers a powerful combination of elements. By meticulously analyzing your documents and metadata, engaging in deep discussions about your domain knowledge, and leveraging cutting-edge models and technology, we strive to create the most effective solution possible today. AGI is imminent, they say. Until then, this is our best strategy!

Get in Touch

To schedule a demo and discuss how we can customize our solutions to meet your requirements, drop us a line at contact@inscripta.ai.