Research highlight: Pretrained Language Models + Language Fundamentals = Better Performance

An Embarrassingly Simple Method to Mitigate Undesirable Properties of Pretrained Language Model Tokenizers by Hofmann, Schütze, and Pierrehumbert (University of Oxford, LMU Munich), ACL 2022

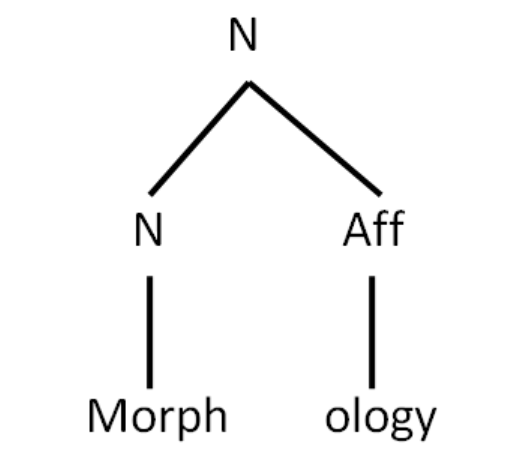

Language models (LMs) such as BERT and GPT-2 use tokenizers to split words into subword segments. These tokenizers sometimes produce segments that are meaningless, or at least less meaningful than they could be. For example, undesirable might be split as und-es-ira-ble rather than the more meaningful un-desir-able. This paper shows that preserving the morphological structure of words during tokenization is beneficial. The authors introduce a tokenization method called FLOTA (Few Longest Token Approximation), which yields more meaningful word segments and improves the performance of BERT, GPT-2, and XLNet on a downstream text classification task.
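To make the contrast concrete, here is a minimal Python sketch of the idea the name suggests: instead of scanning a word left to right, repeatedly pick the longest substring that is in the vocabulary, at most k times, and return the pieces in their original order. This is an illustration under stated assumptions, not the authors' implementation. The toy vocabulary, the function names, the masking character, and the default k are all hypothetical, and details of real tokenizers (such as continuation markers) are ignored.

```python
# Toy vocabulary (hypothetical); real tokenizers have tens of thousands of entries.
TOY_VOCAB = {"un", "desir", "able", "und", "es", "ira", "ble"}

MASK = "\0"  # placeholder for characters already covered by a chosen piece


def greedy_prefix_tokens(word, vocab):
    """Left-to-right longest-prefix matching, a simplified stand-in for
    how standard subword tokenizers segment a word."""
    pieces, pos = [], 0
    while pos < len(word):
        for end in range(len(word), pos, -1):
            if word[pos:end] in vocab:
                pieces.append(word[pos:end])
                pos = end
                break
        else:
            return ["[UNK]"]  # no vocabulary entry matches at this position
    return pieces


def longest_vocab_substring(word, vocab):
    """Return (start, piece) for the longest substring of `word` in `vocab`,
    or None. Masked spans never match because MASK is not in any entry."""
    n = len(word)
    for length in range(n, 0, -1):
        for start in range(n - length + 1):
            piece = word[start:start + length]
            if piece in vocab:
                return start, piece
    return None


def few_longest_tokens(word, vocab, k=3):
    """Pick up to k longest vocabulary substrings, masking each one once it
    is chosen, then return the pieces in their original left-to-right order."""
    found = {}
    remaining = word
    for _ in range(k):
        match = longest_vocab_substring(remaining, vocab)
        if match is None:
            break
        start, piece = match
        found[start] = piece
        remaining = (
            remaining[:start] + MASK * len(piece) + remaining[start + len(piece):]
        )
    return [found[i] for i in sorted(found)]


print("-".join(greedy_prefix_tokens("undesirable", TOY_VOCAB)))  # und-es-ira-ble
print("-".join(few_longest_tokens("undesirable", TOY_VOCAB)))    # un-desir-able
```

With this toy vocabulary, the left-to-right scan commits to "und" early and is then forced into fragments, while the longest-substring search finds "desir" first and leaves "un" and "able" intact. A smaller k drops the shortest pieces, which is why the result is an approximation of the word rather than a full segmentation.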

This paper illustrates two ideas that we believe deserve more appreciation when applying NLP to business problems: (i) LMs like BERT can be improved not only by scaling or indiscriminately throwing more data at them, but also by paying close attention to the language data that matters for the domain or task (an idea we mention in our recent blog post), and (ii) methods that proved useful in earlier NLP eras (the rule-based and statistical/probabilistic modeling eras) retain their value in the deep learning era.

Get in Touch

To schedule a demo and discuss how we can customize our solutions to meet your requirements, drop us a line at contact@inscripta.ai.